There is a data dump from the CBS (Centraal Bureau voor de Statistiek, Statistics Netherlands) called "Wijken en Buurten" ("Districts and Neighborhoods"), which you should be able to find with Google. It contains demographic data about a region (municipality, district, and down to neighborhood level), as counted by the CBS. There is also a data set called "Kerncijfers" ("Core figures"), likewise supplied by the CBS.

The core figures go down to the postal code level, which gives you two things:

- Finer-grained information than you already have (which in itself is not very useful for marketing purposes).

- A correlation between each postal code and the neighborhood it lies in, which lets you map groups of people to the neighborhood level. That is probably a good aggregation level for marketing.
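Mapping postal-code-level records onto neighborhoods is essentially a join followed by a group-by. A minimal sketch, assuming the core figures have already been loaded into a map from postal code to neighborhood code; the class name, the codes and the layout of the maps are hypothetical, not actual CBS column names:

```java
import java.util.Map;
import java.util.TreeMap;

final class PostcodeAggregation {
    // Sums a per-postal-code figure (e.g. number of inhabitants) per neighborhood.
    // postcodeToBuurt: postal code -> neighborhood code (hypothetical layout)
    // countsPerPostcode: postal code -> the figure to aggregate
    static Map<String, Integer> aggregate(Map<String, String> postcodeToBuurt,
                                          Map<String, Integer> countsPerPostcode) {
        Map<String, Integer> perBuurt = new TreeMap<>();
        for (Map.Entry<String, Integer> e : countsPerPostcode.entrySet()) {
            String buurt = postcodeToBuurt.get(e.getKey());
            if (buurt == null) {
                continue; // postal code missing from the mapping: skip it
            }
            // Add this postal code's figure to the running total of its neighborhood
            perBuurt.merge(buurt, e.getValue(), Integer::sum);
        }
        return perBuurt;
    }
}
```

Postal codes that cannot be matched are silently dropped here; in a real import you would probably want to log them instead.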

Then I use a query to get the polygons I need out of PostGIS, through the Hibernate Spatial criteria API:

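```java
import com.vividsolutions.jts.geom.Geometry;
import com.vividsolutions.jts.io.ParseException;
import com.vividsolutions.jts.io.WKTReader;
import java.util.List;
import org.hibernate.Criteria;
import org.hibernate.Session;
import org.hibernatespatial.criterion.SpatialRestrictions;

// Build the filter geometry from the viewport bounds (already converted to RD)
String wktFilter = getFilter( bounds );
WKTReader fromText = new WKTReader();
Geometry filter = null;
try {
    filter = fromText.read( wktFilter );
    // Presumably matches the SRID of the theGeom column; PostGIS rejects mixed SRIDs
    filter.setSRID( -1 );
} catch ( ParseException e ) {
    throw new RuntimeException( "Not a WKT string: " + wktFilter, e );
}

// Select every neighborhood (Buurt) whose geometry intersects the viewport
Session s = HibernateUtil.currentSession();
Criteria testCriteria = s.createCriteria( Buurt.class );
testCriteria.add( SpatialRestrictions.intersects( "theGeom", filter ) );
List<Buurt> buurten = testCriteria.list();
```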
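The getFilter( bounds ) helper isn't shown here. As a hypothetical sketch of what such a helper could return, assuming the bounds have already been converted to an RD bounding box: a WKT POLYGON string that WKTReader can parse. A WKT polygon ring must be closed, so the first corner is repeated at the end. The class and method names are made up for illustration.

```java
final class WktFilter {
    // Builds a closed WKT polygon for an axis-aligned box in RD coordinates (metres).
    // Hypothetical stand-in for the getFilter( bounds ) helper.
    static String boxToWkt(double minX, double minY, double maxX, double maxY) {
        double[][] ring = {
            {minX, minY}, {maxX, minY}, {maxX, maxY}, {minX, maxY},
            {minX, minY} // repeat the first corner to close the ring
        };
        StringBuilder wkt = new StringBuilder("POLYGON((");
        for (int i = 0; i < ring.length; i++) {
            if (i > 0) {
                wkt.append(", ");
            }
            // StringBuilder.append(double) always uses '.' as decimal separator
            wkt.append(ring[i][0]).append(' ').append(ring[i][1]);
        }
        return wkt.append("))").toString();
    }
}
```

Using StringBuilder rather than String.format avoids locale-dependent decimal commas, which a Dutch default locale would otherwise introduce.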

This returns the neighborhoods (or other regions) that intersect my Google Maps viewport :). That's an important step, but it doesn't end there. The geometries in Wijken en Buurten are in Rijksdriehoeksmeting (RD) coordinates, so they need to be converted to the datum that Google Maps uses: WGS-84, the geodetic datum that GPS also uses. For now I'm using this code, a polynomial approximation, to convert between the two:

```java
/**
 * Converts RD coordinates (metres) to WGS-84 {latitude, longitude} in degrees.
 * The RD reference point (155000, 463000) lies in Amersfoort.
 */
public static double[] rdtowgs( double X, double Y ) {
    // Offsets from the RD reference point, scaled by 10^-5
    double dX = (X - 155000) * Math.pow( 10, -5 );
    double dY = (Y - 463000) * Math.pow( 10, -5 );

    // Corrections in arc seconds
    double SomN = (3235.65389d * dY)
            + (-32.58297d * Math.pow( dX, 2 ))
            + (-0.2475d * Math.pow( dY, 2 ))
            + (-0.84978d * Math.pow( dX, 2 ) * dY)
            + (-0.0655d * Math.pow( dY, 3 ))
            + (-0.01709d * Math.pow( dX, 2 ) * Math.pow( dY, 2 ))
            + (-0.00738d * dX)
            + (0.0053d * Math.pow( dX, 4 ))
            + (-0.00039d * Math.pow( dX, 2 ) * Math.pow( dY, 3 ))
            + (0.00033d * Math.pow( dX, 4 ) * dY)
            + (-0.00012d * dX * dY);
    double SomE = (5260.52916d * dX)
            + (105.94684d * dX * dY)
            + (2.45656d * dX * Math.pow( dY, 2 ))
            + (-0.81885d * Math.pow( dX, 3 ))
            + (0.05594d * dX * Math.pow( dY, 3 ))
            + (-0.05607d * Math.pow( dX, 3 ) * dY)
            + (0.01199d * dY)
            + (-0.00256d * Math.pow( dX, 3 ) * Math.pow( dY, 2 ))
            + (0.00128d * dX * Math.pow( dY, 4 ))
            + (0.00022d * Math.pow( dY, 2 ))
            + (-0.00022d * Math.pow( dX, 2 ))
            + (0.00026d * Math.pow( dX, 5 ));

    // Arc seconds / 3600 = degrees, added to the reference latitude/longitude
    return new double[] { 52.15517d + (SomN / 3600), 5.387206d + (SomE / 3600) };
}

/** Converts WGS-84 latitude/longitude (degrees) to RD {x, y} in metres. */
public static double[] wgstord( double Latitude, double Longitude ) {
    // Offsets from the reference point in units of 10^4 arc seconds (0.36 = 3600 / 10000)
    double dF = 0.36d * (Latitude - 52.15517440d);
    double dL = 0.36d * (Longitude - 5.38720621d);

    double SomX = (190094.945d * dL)
            + (-11832.228d * dF * dL)
            + (-144.221d * Math.pow( dF, 2 ) * dL)
            + (-32.391d * Math.pow( dL, 3 ))
            + (-0.705d * dF)
            + (-2.340d * Math.pow( dF, 3 ) * dL)
            + (-0.608d * dF * Math.pow( dL, 3 ))
            + (-0.008d * Math.pow( dL, 2 ))
            + (0.148d * Math.pow( dF, 2 ) * Math.pow( dL, 3 ));
    double SomY = (309056.544d * dF)
            + (3638.893d * Math.pow( dL, 2 ))
            + (73.077d * Math.pow( dF, 2 ))
            + (-157.984d * dF * Math.pow( dL, 2 ))
            + (59.788d * Math.pow( dF, 3 ))
            + (0.433d * dL)
            + (-6.439d * Math.pow( dF, 2 ) * Math.pow( dL, 2 ))
            + (-0.032d * dF * dL)
            + (0.092d * Math.pow( dL, 4 ))
            + (-0.054d * dF * Math.pow( dL, 4 ));

    return new double[] { 155000 + SomX, 463000 + SomY };
}
```

So that's that! Any data coming back from the UI (the viewport bounds) has to be converted to RD first, before querying for the regions in the viewport. The polygons that come back go the other way, from RD to WGS-84, before they are drawn.
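Because RD is a projected grid, a latitude/longitude rectangle does not map onto an exact rectangle in RD. A cautious approach is to convert all four corners of the viewport and take the minimum and maximum, as in this sketch. The converter is passed in as a function so the sketch stands alone; in practice it would wrap wgstord. The class and parameter names are hypothetical.

```java
import java.util.function.BiFunction;

final class ViewportToRd {
    // Returns {minX, minY, maxX, maxY} in RD for a WGS-84 viewport.
    // toRd maps (latitude, longitude) to {x, y}, e.g. (lat, lon) -> wgstord(lat, lon).
    static double[] envelope(double south, double west, double north, double east,
                             BiFunction<Double, Double, double[]> toRd) {
        double[][] corners = {
            toRd.apply(south, west), toRd.apply(south, east),
            toRd.apply(north, east), toRd.apply(north, west)
        };
        double minX = Double.POSITIVE_INFINITY, minY = Double.POSITIVE_INFINITY;
        double maxX = Double.NEGATIVE_INFINITY, maxY = Double.NEGATIVE_INFINITY;
        for (double[] c : corners) {
            // Grow the envelope so it contains every converted corner
            minX = Math.min(minX, c[0]);
            maxX = Math.max(maxX, c[0]);
            minY = Math.min(minY, c[1]);
            maxY = Math.max(maxY, c[1]);
        }
        return new double[] {minX, minY, maxX, maxY};
    }
}
```

The resulting envelope can be slightly larger than the visible area, which only means a few extra neighborhoods at the edges are returned.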

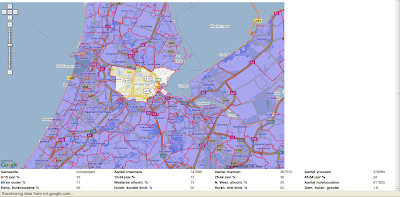

We're still refining things, such as encoded polygons and possibly some caching, but in compiled form the app is already a lot quicker than in the GWT shell. At the moment we're simply forwarding the individual points, which is less efficient and probably less precise. That shouldn't take long to fix, though. The rest of the application just uses the Google Maps library to put that little map on the screen. That means wiring up the proper events, hooks and "scriptlets", to throw in the old Microsoft term.
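Encoded polygons in Google Maps use Google's encoded polyline format: each coordinate is rounded to five decimals, stored as the difference from the previous point, and written out as printable ASCII characters in 5-bit chunks. A minimal Java sketch of such an encoder, assuming the points are already WGS-84 {latitude, longitude} pairs (for example the output of rdtowgs); the class name is hypothetical and this is not code from the application itself:

```java
final class PolylineEncoder {
    // Encodes {latitude, longitude} pairs with Google's encoded polyline algorithm.
    static String encode(double[][] points) {
        StringBuilder out = new StringBuilder();
        long prevLat = 0;
        long prevLng = 0;
        for (double[] p : points) {
            // Round to 5 decimals and store only the delta from the previous point
            long lat = Math.round(p[0] * 1e5);
            long lng = Math.round(p[1] * 1e5);
            encodeValue(lat - prevLat, out);
            encodeValue(lng - prevLng, out);
            prevLat = lat;
            prevLng = lng;
        }
        return out.toString();
    }

    private static void encodeValue(long value, StringBuilder out) {
        // Left-shift one bit; invert negative values so the sign ends up in bit 0
        long v = value << 1;
        if (value < 0) {
            v = ~v;
        }
        // Emit 5-bit chunks, least significant first; 0x20 flags "more chunks follow"
        while (v >= 0x20) {
            out.append((char) ((0x20 | (v & 0x1f)) + 63));
            v >>= 5;
        }
        out.append((char) (v + 63));
    }
}
```

Rounding to 10^-5 degrees corresponds to roughly a metre on the ground, which should be ample for neighborhood borders. The three-point example from Google's documentation of the format, (38.5, -120.2), (40.7, -120.95), (43.252, -126.453), is a convenient check for an implementation like this.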

The figures are from 2004 and were slightly updated in 2006, though probably not by much. The actual figures on inhabitants are from 2009 and are based on the GBA (the Dutch municipal population register), but some effort has been made to alter the data where privacy problems could arise.

What's the use? Well, together with the postal code, which is often requested anyway, or with the region derived from the customer's IP address through geotargeting, you can start data mining. Marketers can fairly easily develop profiles for what they're selling. The IP address quickly reveals the region, which may give clues about preferences when the person isn't otherwise known, for example through a login (any preferences or login data you have are far more useful than this silly method of customer targeting).

Based on the region, IP address or postal code, you can estimate which kinds of products are more likely to suit the visitor, and adjust the web content to the person who is visiting. Other small clues, like the first three clicks, could in theory tell you the rest: what the person is trying to do, or why they're visiting your site.
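Once a postal code is known, the lookup itself is simple. A minimal sketch, assuming a table keyed on the four-digit part of Dutch postal codes ("1234 AB" style) that maps to a profile label; the profiles, the fallback and the class name are hypothetical placeholders for whatever the data mining produces:

```java
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class RegionProfiles {
    // Dutch postal code: four digits (first one not 0), optional space, two letters
    private static final Pattern POSTCODE =
            Pattern.compile("([1-9][0-9]{3})\\s?([A-Za-z]{2})");

    // Returns the four-digit part of a valid postal code, or empty if the input is not one
    static Optional<String> pc4(String input) {
        Matcher m = POSTCODE.matcher(input.trim());
        return m.matches() ? Optional.of(m.group(1)) : Optional.empty();
    }

    // Looks up the profile for a visitor's postal code, falling back to a default
    static String profileFor(String postcode, Map<String, String> profilesByPc4, String fallback) {
        return pc4(postcode).map(profilesByPc4::get).orElse(fallback);
    }
}
```

A region derived from the visitor's IP address could feed the same kind of table, keyed on a coarser region code instead.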

Some other sites, like funda.nl, use similar databases, although I believe they paid around 7,000 EUR for a postal code database that is slightly more precise. The CBS borders are the official borders established by the government.

The little map you see on this page is interactive, loads only what it needs based on the viewport bounds, and was hacked together in 16 hours.