http://www.realclimate.org/index.php/archives/2009/11/the-cru-hack/

http://www.realclimate.org/index.php/archives/2009/11/the-cru-hack-context/

New tool in town: KnowledgeGenes.com

16 years ago

Not hindered by any lack of knowledge, this blog aims to provide challenging thoughts on various topics.

A = U Ʃ V

[ [3,5,2,4,4],One thing I discovered (which many sites probably mention in not so readable terms :), is that when the matrix becomes relatively large, the first singular value on the diagonal approximates the average multiplied by the square root of the total numbers available in the matrix ( in the case above, the average is 3.48 ).

[3,2,5,5,3],

[4,4,5,4,3],

[2,3,4,5,2],

[1,5,5,2,2] ]

Ʃ(1) = avg * sqrt( number_of_cells )The second singular value for larger, irregular matrices is approximated by:

Ʃ(2) = sqrt( 2 * Ʃ(1) )So there certainly is a pattern being generated. Each singular value in the decomposition is actually a gain factor. So, if the matrix is really, really large, the singular values become in the orders of 1000's and the values in the first columns of U, V become 1/1000 in the case of this particular range for the Netflix data ( 1-5 across the matrix ).

sigma = mat( [ 17.76224954, 3.5139895, 3.16968094, 1.60407331, 0.73105457 ] )And here are U and V:

mw = mat( [[-0.44379232, -0.33273609 ,-0.80072792 , 0.11314317 ,-0.19587877], \Notice how in the U and V matrix, the numbers are maintained at roughly the same magnitude throughout each dimension (you need to read rows and each column further to the right is one dimension deeper). This can be done because if you follow the implementation of SVD correctly, the singular value is the gain factor. So, choosing the correct 'gain' is essential for proper functioning of the model, or you need to adjust the parameters in each dimension.

[-0.4616133 , 0.58960592 , 0.15588499 ,-0.08007446 ,-0.63919166], \

[-0.5053614 , 0.05135939 , 0.03629005 ,-0.69454331 , 0.50819749], \

[-0.41956887 , 0.28391073 , 0.09075436 , 0.69668699 , 0.49974748], \

[-0.39816248 ,-0.67705869 , 0.57007135 , 0.11412068 ,-0.21225762] ] )

cw = mat( [[-0.33638547 , 0.24667399 ,-0.32741104 ,-0.73031105 , 0.43063272], \

[-0.47365356 ,-0.80039862 ,-0.13379567 , 0.1795791 , 0.29131499], \

[-0.52873629 , 0.082443 , 0.81168849 ,-0.18044758 ,-0.14913599], \

[-0.50662787 , 0.5372694 ,-0.21592459 , 0.61450025 , 0.17445861], \

[-0.3553354 ,-0.05530591, -0.4116298 ,-0.15564457 ,-0.82280841] ] )

[[4 4 4 4 4]Whoops, what happened? We now only have 2 dimensions left, but after the third dimension all values are 0? This is because the matrix is so regular that it can be described perfectly with two factors. Hmmm.... So if there is good regularity in the data, then we need less numbers to represent the matrix! Of course, the above is taken to the extreme, but looking at recommendation systems, there are certainly regularities:

[5 5 5 5 5]

[3 3 3 3 3]

[2 2 2 2 2]

[1 1 1 1 1]]

U:

[[-0.54446573 -0.21442291 0.76382282 -0.25982498 0.08152026]

[-0.65208362 -0.46955287 -0.55122106 0.17692309 0.13842191]

[-0.3619848 0.49755586 0.15572615 0.71006801 -0.30489008]

[-0.31846257 0.36154464 -0.26944015 -0.60360543 -0.57526478]

[-0.21422565 0.59604242 -0.12602146 -0.1807017 0.74182633]]

sigma:

[ 17.71717246 1.04811144 0. 0. 0. ]

VT:

[[-0.53913501 0.50275905 0.61561055 -0.13109677 0.24577241]

[-0.40915723 -0.26153467 0.04808419 0.872681 -0.01748586]

[-0.35699995 -0.56822929 -0.1744093 -0.31507809 0.64805378]

[-0.37793419 -0.44513203 0.23939904 -0.33777929 -0.69829541]

[-0.52119151 0.39724791 -0.72868447 -0.08872685 -0.17804491]]

Aij = Ri1 * Cj1 * Ʃ1In practice, for slightly larger matrices and those that are totally random, the root mean square error of each estimation vs. the real value will be around 1.41 or so. Of course, this is a function of the range used in the actual matrix A. It also shows that if the matrix is highly irregular, you're not converging very quickly to an error rate where your model becomes useful for making nice approximations.

-Wall -march=core2 -mtune=core2 -O2These show that the binaries to be built are tuned specifically to my processor that I am running, instead of generic "i386" architectures. I'm also no expert on Python, so there may be many ways to totally optimize python such that it runs much better. The flags above will generate code that is much more efficient and reduces a single cycle of 27 seconds on generic i386 architectures to 7 secs in total.

my2DArray[ ROWS ][ COLS ];When cycling through this loop, you'll want to do this by rows first, then cycle on the columns. The columns are probably linearly aligned in memory, one after the other. So you'd typically iterate through this array through:

for i = 0 to ROWS:Compare that to:

for j = 0 to COLS:

my2DArray[ i ][ j ]

for j = 0 to COLS:The second has cache misses all over the place, which is very bad for performance. Ideally, for computational tasks you find an algorithm that both keeps data close in the processor cache as long as possible, but of course only if that is feasible.

for i = 0 to ROWS:

my2DArray[ i ][ j ]

from scipy import linalg, mat, dot;This code prints the following:

matrix = mat( [[2,1,0,0], [4,3,0,0]] );

print "Original matrix:"

print matrix

U, s, V = linalg.svd( matrix )

print "U:"

print U

print "sigma:"

print s

print "VT:"

print V

dimensions = 1

rows,cols = matrix.shape

#Dimension reduction, build SIGMA'

for index in xrange(dimensions, rows):

s[index]=0

print "reduced sigma:"

print s

#Reconstruct MATRIX'

reconstructedMatrix= dot(dot(U,linalg.diagsvd(s,len(matrix),len(V))),V)

#Print transform

print "reconstructed:"

print reconstructedMatrix

Original matrix:And with one more dimension for sigma:

[[2 1 0 0]

[4 3 0 0]]

U:

[[-0.40455358 -0.9145143 ]

[-0.9145143 0.40455358]]

sigma:

[ 5.4649857 0.36596619]

VT:

[[-0.81741556 -0.57604844 0. 0. ]

[-0.57604844 0.81741556 0. 0. ]

[ 0. 0. 1. 0. ]

[ 0. 0. 0. 1. ]]

reduced sigma:

[ 5.4649857 0. ]

reconstructed:

[[ 1.80720735 1.27357371 0. 0. ]

[ 4.08528566 2.87897923 0. 0. ]]

reduced sigma:This is how you can use Python for quick tests and experiments on SVD.

[ 5.4649857 0.36596619]

reconstructed:

[[ 2. 1. 0. 0.]

[ 4. 3. 0. 0.]]

Thought... what is it? I've posted before on the topic of consciousness and thought. Without any final conclusion, the topic of thought is discussed in philosophy with differing opinions on the matter. Some say that thought has mystic properties and is only reproducible in biological matters, some in that camp go as far to state that thought is purely human and is what separates us from animals. Could be, since there surely are specific differences in the way we learn, reason and behave, even compared to monkeys. See "Ape Genius" here for example. The last video talks about a very important difference, namely pointing. The questions posed in the research is whether apes are more or less thinking like us or share specific traits that make them behave like us. Looking at the videos and specifically the parts about cooperation and learning, I have personally come to the conclusion that there is not that much in common (the problem is that in a way, apes look more like us than, say, horses, so we're inclined to believe they think like us for that reason. But are they really the same once you completely ignore the 'look-alike'? ). Back to the question at hand... there are other streams in philosophy that believe thought is computational. Then there are once again subdivisions in that region. Some say that the mind is partly computational, but has other traits that are incredibly hard to model and execute on a computer for example.

Thought... what is it? I've posted before on the topic of consciousness and thought. Without any final conclusion, the topic of thought is discussed in philosophy with differing opinions on the matter. Some say that thought has mystic properties and is only reproducible in biological matters, some in that camp go as far to state that thought is purely human and is what separates us from animals. Could be, since there surely are specific differences in the way we learn, reason and behave, even compared to monkeys. See "Ape Genius" here for example. The last video talks about a very important difference, namely pointing. The questions posed in the research is whether apes are more or less thinking like us or share specific traits that make them behave like us. Looking at the videos and specifically the parts about cooperation and learning, I have personally come to the conclusion that there is not that much in common (the problem is that in a way, apes look more like us than, say, horses, so we're inclined to believe they think like us for that reason. But are they really the same once you completely ignore the 'look-alike'? ). Back to the question at hand... there are other streams in philosophy that believe thought is computational. Then there are once again subdivisions in that region. Some say that the mind is partly computational, but has other traits that are incredibly hard to model and execute on a computer for example. I've updated my Linux system to Karmic Koala over the weekend. It seems to work quite well. For the first time, I decided to kill all the binaries that somehow made it to my machine over a course of 2 years and do a fresh binaries install, keeping my home mount with data. That worked out well and the machine even booted my RAID-0 array with dmraid without any problems this time. Ubuntu 9.10 works like a treat, you should try it!

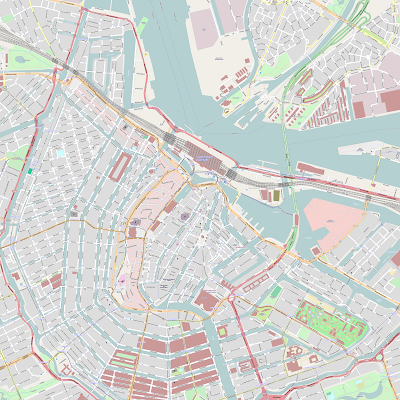

I've updated my Linux system to Karmic Koala over the weekend. It seems to work quite well. For the first time, I decided to kill all the binaries that somehow made it to my machine over a course of 2 years and do a fresh binaries install, keeping my home mount with data. That worked out well and the machine even booted my RAID-0 array with dmraid without any problems this time. Ubuntu 9.10 works like a treat, you should try it! In this tutorial, they end up building a little world map, which I attempted and worked out, as you can see. The world map on the right was constructed by the information of SHAPE files on my server. The overview is a very generic image, but the information in the SHAPE file is so great, that I can zoom in to great extents and produce the complete coastline of Antarctica or any country in Europe. Thanks to the efforts of the Openstreetmaps project that is and people and organizations that collaborate with them. Notice also how the world seems to appear slightly distorted. I think this is related to the chosen projection method.

In this tutorial, they end up building a little world map, which I attempted and worked out, as you can see. The world map on the right was constructed by the information of SHAPE files on my server. The overview is a very generic image, but the information in the SHAPE file is so great, that I can zoom in to great extents and produce the complete coastline of Antarctica or any country in Europe. Thanks to the efforts of the Openstreetmaps project that is and people and organizations that collaborate with them. Notice also how the world seems to appear slightly distorted. I think this is related to the chosen projection method. So this is great. I can now produce very detailed streetmaps of any region in Holland, reason about those places and ways through a database with spatial reasoning predicates, find out extents of regions, and so forth. Mapnik also provides scripts to generate tiles from a given 'planet' database. Tiling a country however can produce a very large amount of data on your PC, so use with care :). The above image was produced using standard styling rules. It is possible to adjust this styling or replace it entirely, such that it becomes more personal. These generated images, together with a bit of JavaScript and the original PostGis database as a backup to the locations of points of interest are at the core of Google Maps.

So this is great. I can now produce very detailed streetmaps of any region in Holland, reason about those places and ways through a database with spatial reasoning predicates, find out extents of regions, and so forth. Mapnik also provides scripts to generate tiles from a given 'planet' database. Tiling a country however can produce a very large amount of data on your PC, so use with care :). The above image was produced using standard styling rules. It is possible to adjust this styling or replace it entirely, such that it becomes more personal. These generated images, together with a bit of JavaScript and the original PostGis database as a backup to the locations of points of interest are at the core of Google Maps.